> [!quote] [Wikipedia Definition](https://en.wikipedia.org/wiki/Moore%27s_law)

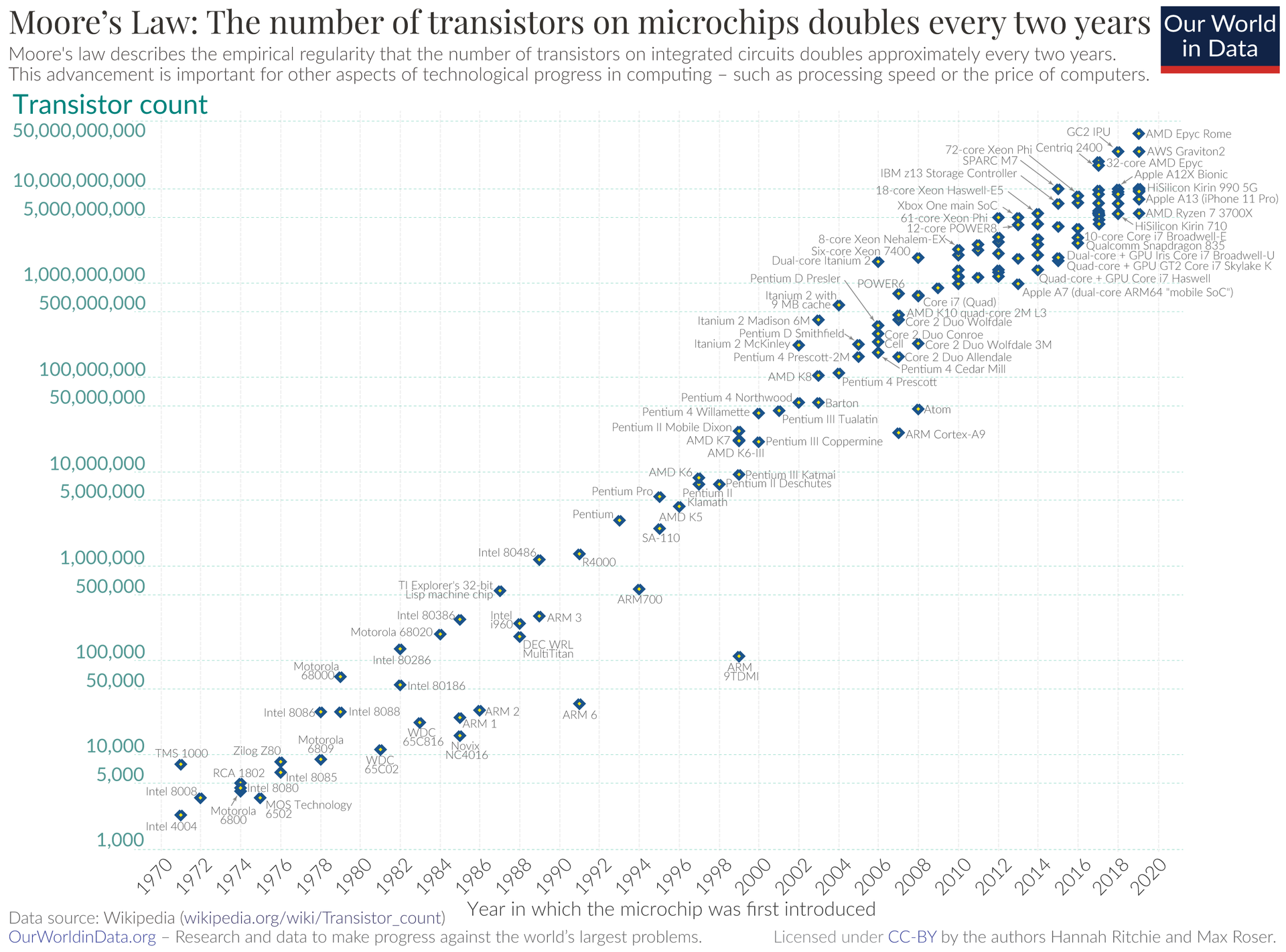

> **Moore's law** is the observation that the number of transistors in an integrated circuit (IC) ==doubles about every two years==. Moore's law is an observation and projection of a historical trend.
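The doubling rule in the quote can be written as an exponential. In this rough worked example, $t$ is measured in years. The symbols $N_0$ (the starting transistor count) and $T$ (the doubling period) are introduced here for illustration. A two-year doubling period compounds to roughly a thousandfold increase over two decades. Stretching the period to three years cuts that to about a hundredfold.

$$
N(t) = N_0 \cdot 2^{t/T}, \qquad
\left.\frac{N(20)}{N_0}\right|_{T=2} = 2^{10} = 1024, \qquad
\left.\frac{N(20)}{N_0}\right|_{T=3} = 2^{20/3} \approx 102
$$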

# End of Moore's Law

💥 This was a **bogeyman** that loomed over us during the 2000s. It was a neo-[[Malthusianism]] fear (computing growth stagnating while demand for computing keeps growing) that captivated us all.

**Examples:**

* [[Coders at Work]] talked about this extensively.

* [[Asynchronous Programming#[C10k problem](https://en.wikipedia.org/wiki/C10k_problem)|C10k problem]] was the major problem statement that motivated [[Node.JS|Node.js]].

* Everyone thought we needed to change how we program to take advantage of the multi-core world. A few popular approaches:

    * Actor Model

    * STM - Software Transactional Memory

## A *Revisionist* Moore's Law

During the 2010s, we lived under the *revised* Moore's law. Moore's law slowed down but it didn't grind to a halt.

* The doubling period has lengthened, but transistor density is still increasing.

* Higher transistor density no longer translates into faster single cores so much as into more cores.

* Single-core performance still grew, though, and common applications benefited from it.

* Computing got cheaper over time.

## Historical Trend

[Our World in Data](https://ourworldindata.org/moores-law)

# Why this hasn't impacted us

However, the end of Moore's Law didn't hurt us in the 2010s. Even more peculiar, we're not even talking about it anymore. This feels similar to *peak oil*: we talked more about peak oil in the 1990s than in the 2020s.

### Software and Hardware are good enough

Raw thesis: unlike in the 1990s or even the early 2000s, further increases in computing power now have diminishing returns. This is drastically different from the 1980s, when, say, personal computers couldn't hold all kanji characters in RAM, so clever hacks had to be invented.

We keep finding clever ways to use the extra computing resources (high DPI screens, browser-based applications, videos with higher resolution and bitrate), but these changes are less groundbreaking than the ones we faced before. In terms of raw capability, people could already write business reports with MS Word 97.

### Rise of Mobile

From a software engineering perspective, the rise of mobile temporarily set Moore's law back, because the computing power available to an application decreased.

Initially, mobile devices were less computationally capable, so software had to be more efficient. Even after they caught up, power consumption remained a concern, so software was still incentivized to stay efficient.

### Rise of Hardware Acceleration

In the 2000s, *efficient code* meant a tight loop written in assembly. The 2020s are a different world: performance-sensitive code is now hardware accelerated[^hackersdelight]. Such hardware acceleration is heavily specialized, both in the logic being performed (ex: decoding a video) and in the hardware required to do it.

[^hackersdelight]: Even in the 2000s, assembly meant SIMD code (SSE, and later AVX), not copying a snippet from *Hacker's Delight*.

**Examples:**

* **Video encoding / decoding** - Hardware-accelerated decoding is especially important for power-constrained mobile devices.

* **UI/UX** - UI rendering is now hardware accelerated. Nowadays *pure 2D graphics* barely exists, because 2D is treated as a special case of 3D graphics.

* Rise of **GPUs and GPGPU** - The [HPC](https://en.wikipedia.org/wiki/High-performance_computing) space is dominated by GPUs. Unfortunately, it is hard to know the exact ratio of GPU to CPU computing power in the [TOP500](https://en.wikipedia.org/wiki/TOP500) list.

    * Nvidia CEO Jensen Huang - [AI's iPhone Moment](https://stratechery.com/2023/an-interview-with-nvidia-ceo-jensen-huang-about-ais-iphone-moment/)

* Rise of the **FPGA / ASICs**

    * [AI Accelerators](https://en.wikipedia.org/wiki/AI_accelerator) / [Deep Learning Processors](https://en.wikipedia.org/wiki/Deep_learning_processor). Google DeepMind showed their potential with AlphaGo, which ran on Google's Tensor Processing Units (TPUs). Smartphone manufacturers are shipping phones with *neural engines*.

* **High performance IO**

    > [!TODO] learn more about this topic

    * IO devices saw super-Moore's-law growth, memory and network bandwidth in particular.

    * Software abstractions (ex: syscall overhead) became a real obstacle to fully utilizing this hardware.

    * Special programming models (userland networking, CPU-less memory copying, memory-mapped devices) were needed.

Finally, software matured enough to harness these capabilities without burdening developers with their internals. Certain capabilities (video decoding, UI/UX) are hardware accelerated simply by using the right SDK. As long as you're invoking the right function, you're set. Frameworks such as NumPy, PyTorch, and others provide building blocks for writing efficient code in high-level environments.

Also note that these problems are quite homogeneous. Take video decoding: once the problem is articulated, further improvements are about pushing more bytes and pixels (higher resolution, higher color depth, more frames) and better algorithms to handle it all. Because of this, using a library that takes advantage of ever-improving hardware acceleration is the right long-term decision for both application and framework developers.
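Here is a small illustration of the point about NumPy, assuming NumPy is installed. It computes the same dot product twice: once as an interpreted Python loop and once as a single `np.dot` call. For 1-D float arrays NumPy typically hands `np.dot` to an optimized BLAS routine, which may use SIMD instructions. The function names `dot_loop` and `dot_vectorized` are hypothetical, and timings will vary by machine.

```python
import math
import time

import numpy as np


def dot_loop(a: list[float], b: list[float]) -> float:
    """Dot product in pure Python: one interpreted iteration per element."""
    total = 0.0
    for x, y in zip(a, b):
        total += x * y
    return total


def dot_vectorized(a: np.ndarray, b: np.ndarray) -> float:
    """Same computation, handed to NumPy's compiled dot kernel in one call."""
    return float(np.dot(a, b))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a = rng.random(1_000_000)
    b = rng.random(1_000_000)

    start = time.perf_counter()
    slow = dot_loop(a.tolist(), b.tolist())
    loop_s = time.perf_counter() - start

    start = time.perf_counter()
    fast = dot_vectorized(a, b)
    vec_s = time.perf_counter() - start

    # Results agree up to differences in floating-point summation order.
    assert math.isclose(slow, fast, rel_tol=1e-9)
    print(f"loop: {loop_s:.3f}s  vectorized: {vec_s:.4f}s")
```

The application code stays the same high-level call, and whatever acceleration the underlying library gains benefits it for free.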

> [!note] On Intel
> Note that this is all terrible for Intel, as they depended on Moore's Law. More specifically, they benefited when the industry depended on Moore's Law continuing. Intel is now stagnant, and the industry is perfectly fine with it because other hardware advancements matter more to us.

### Rise of Server-side programming

And the perils of [[Scalability]]...

On the server side, the internet (and individual products on it) grew astronomically faster than Moore's Law. One couldn't rely on Moore's Law to reach web scale.

Many ideas had to be invented for the multi-server world. This world is inherently concurrent and parallel, and new methodologies emerged to deal with that complexity. Google's classic papers, starting with *MapReduce*, provided the foundation.

However, we don't even need such an advanced example. Even the industry best practice of "multiple stateless application servers behind a load balancer" made the [Shared Nothing Architecture](https://en.wikipedia.org/wiki/Shared-nothing_architecture) mainstream. In this world, software is already abstracted away from Moore's Law.
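Here is a rough sketch of the MapReduce programming model, using the classic word-count example rather than Google's implementation. The map, shuffle, and reduce phases are written as plain Python functions. The names `map_phase`, `shuffle`, `reduce_phase`, and `word_count` are hypothetical. In a real cluster the framework would distribute these calls across machines; here they run sequentially in one process.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(document: str) -> Iterator[tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input split."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Group intermediate values by key, as the framework does between phases."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reducer: combine all values emitted for one key."""
    return key, sum(values)


def word_count(documents: list[str]) -> dict[str, int]:
    # Each map_phase call and each reduce_phase call is independent of the
    # others, which is what lets a cluster run them on different machines.
    intermediate = (pair for doc in documents for pair in map_phase(doc))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


if __name__ == "__main__":
    docs = ["the quick brown fox", "the lazy dog", "The fox"]
    print(word_count(docs))
    # → {'the': 3, 'quick': 1, 'brown': 1, 'fox': 2, 'lazy': 1, 'dog': 1}
```

Mappers share no state, and reducers only see the values for their own key. That independence is what lets the work scale out horizontally instead of waiting for faster single machines.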

> [!todo] An Observation
> Stateless and shared-nothing architecture became *too* mainstream, and now we're afraid to write a certain class of applications (ex: chat applications). Newer methodologies (Firebase, Supabase, Phoenix) had to be invented to handle these use cases.

### Programming Models

In client-side programming, once expensive operations were hardware accelerated, the CPU became *less* utilized. Another observation was that in the world of microservices and connected devices, the biggest bottleneck is network IO across the internet.

**Rise of [[JavaScript]]** - on both the server and the UI - made us realize that a single-threaded environment is sufficient for writing complex applications. React Native is a good example: once only the pure business logic is distilled into it, JavaScript is an efficient enough programming language.

This isn't without pitfalls. One can CPU-lock a JavaScript event loop (ex: by running an expensive regular expression), and some workloads need other programming models (web workers). However, these pitfalls are seldom encountered, and there are valid workarounds.
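The same pitfall exists in any single-threaded event loop. This is an analogy, not JavaScript: Python's `asyncio` stands in for the JavaScript event loop, and a worker thread loosely stands in for a web worker. The sketch runs a ticker coroutine alongside an expensive computation. When the computation runs directly on the loop, it starves the ticker. When it is handed to `loop.run_in_executor`, the ticker keeps firing. The helpers `cpu_bound`, `ticker`, and `run` are hypothetical. In CPython, the GIL means the worker thread keeps the loop responsive but does not add true parallelism.

```python
import asyncio
import time


def cpu_bound(n: int) -> int:
    """A deliberately expensive pure-Python computation."""
    total = 0
    for i in range(n):
        total += i * i
    return total


async def ticker(ticks: list[float], stop: asyncio.Event) -> None:
    """Records a timestamp roughly every 10 ms while the event loop is free."""
    while not stop.is_set():
        ticks.append(time.perf_counter())
        await asyncio.sleep(0.01)


async def run(offload: bool, n: int = 3_000_000) -> int:
    """Runs the expensive work and returns how many ticks the ticker recorded."""
    ticks: list[float] = []
    stop = asyncio.Event()
    task = asyncio.create_task(ticker(ticks, stop))
    await asyncio.sleep(0)  # yield once so the ticker records its first tick
    if offload:
        # Hand the work to a worker thread; the loop keeps servicing the ticker.
        loop = asyncio.get_running_loop()
        await loop.run_in_executor(None, cpu_bound, n)
    else:
        # Run the work on the loop itself: nothing else runs until it returns.
        cpu_bound(n)
    stop.set()
    await task
    return len(ticks)


if __name__ == "__main__":
    print("ticks while blocking: ", asyncio.run(run(offload=False)))
    print("ticks while offloaded:", asyncio.run(run(offload=True)))
```

In the blocking case the ticker only gets its initial tick. The loop cannot run anything else until `cpu_bound` returns, just as a JavaScript page freezes during a long synchronous task.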

> [!note]
> (Other programming languages and models, such as [[Go]] and [[Elixir]], also gained popularity alongside [[JavaScript]].)

### Single-core performance became less of a concern

As previously mentioned, our computing devices got faster over time, and we weren't bound by single-core performance.

Moreover, a lot of problems are naturally parallel: running multiple processes, having multiple tabs open in a browser. Google Chrome with 100 tabs exhausting our computer is a first-world problem we all face, but Moore's law wouldn't have eliminated it anyway.

## There are areas that still hurt

> [!TODO] Finish this section

* Simulation - I have no clue how HPC simulations that depend on a lot of hard-to-parallelize code (ex: fluid dynamics) are faring.

* Certain games - Cities: Skylines, Civilization, and other large simulation / strategy games - have core simulation logic that is single threaded. These games suffer as the game state grows.

    * I'm curious whether these games can benefit from advances in modern AI.